Overview

The challenge will explore the use of CV techniques for endangered wildlife conservation, specifically focusing on the Amur tiger, also known as the Siberian tiger or the Northeast-China tiger. The Amur tiger population is concentrated in the Far East, particularly the Russian Far East and Northeast China. The remaining wild population is estimated to be 600 individuals, so conservation is of crucial importance.

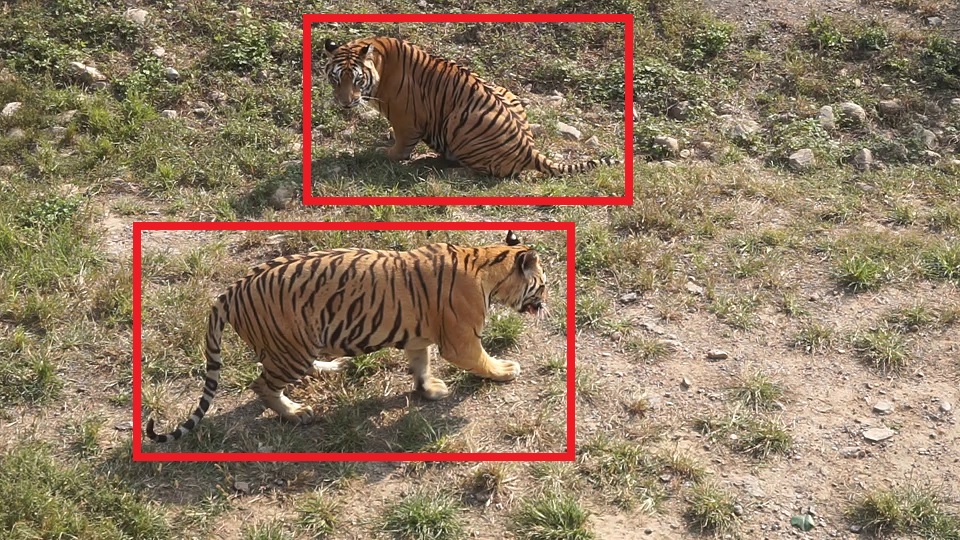

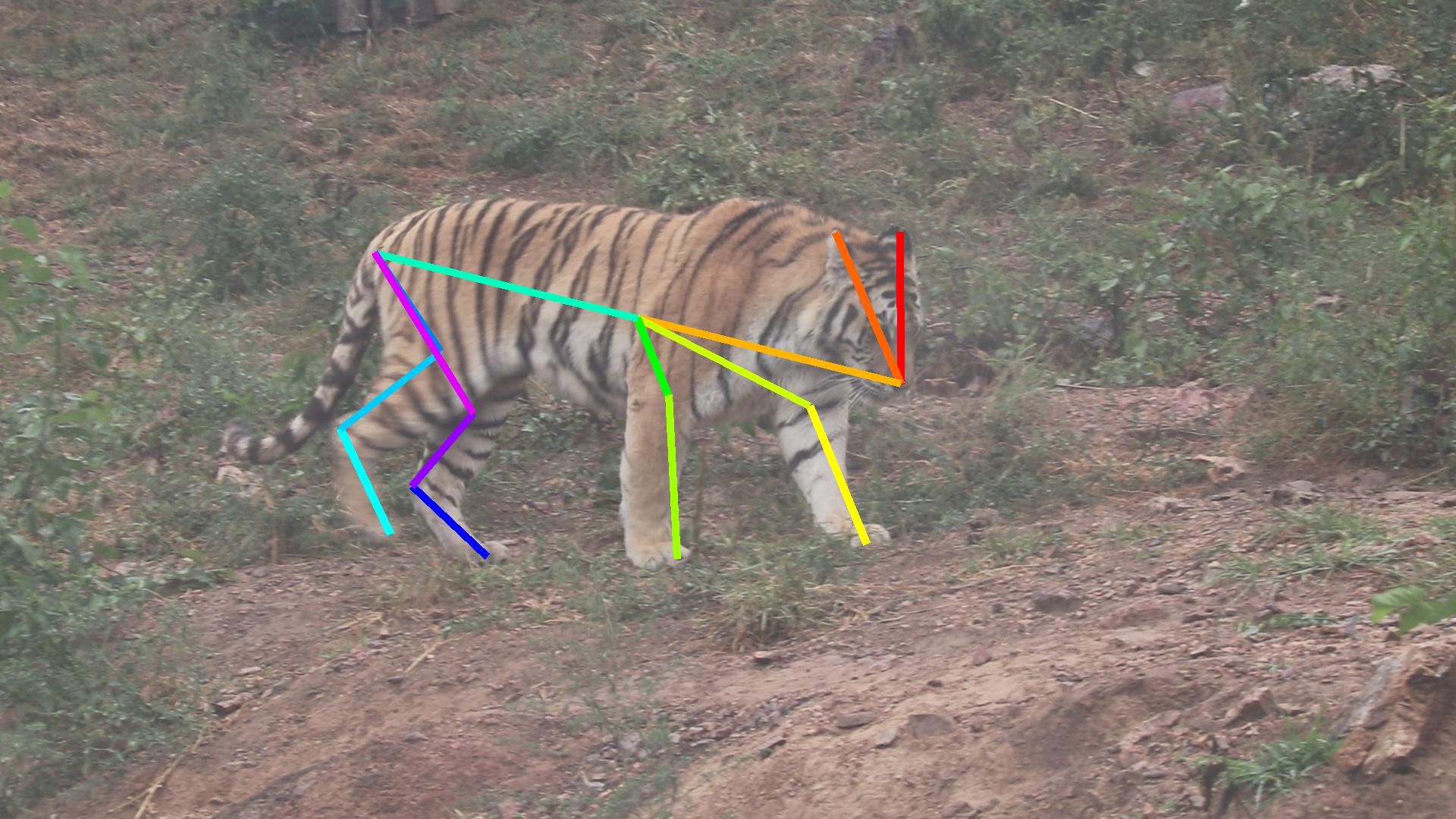

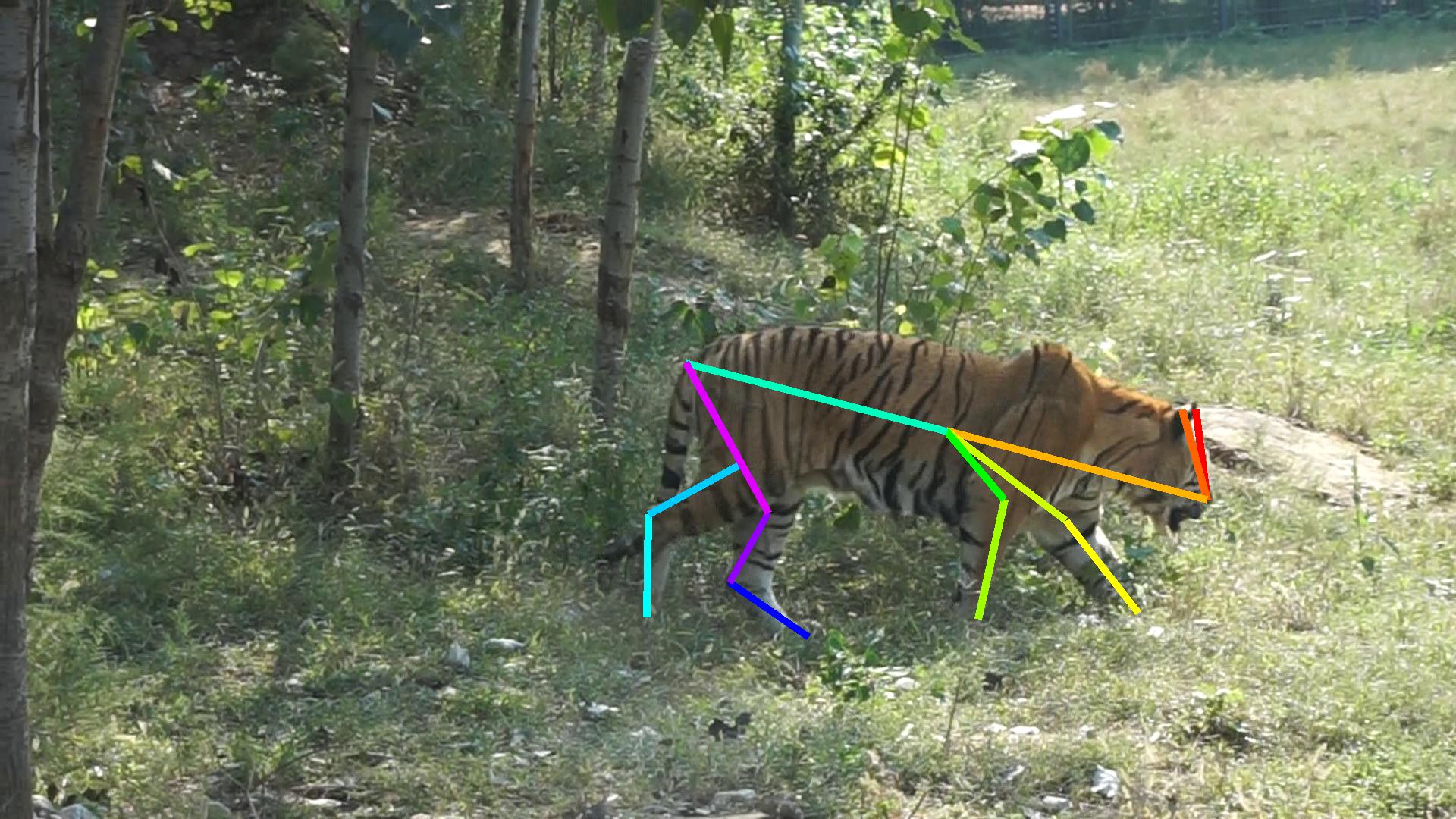

Dataset: With the help of WWF, a third-party company (MakerCollider) collected more than 8,000 Amur tiger video clips of 92 individuals from ~10 zoos in China. We organize efforts to make bounding-box, keypoint-based pose, and identity annotations for sampled video frames and formuate the ATRW (Amur Tiger Re-identification in the Wild) dataset. Figure 1 illustrates some example bounding box and pose keypoint annotations in our ATRW dataset. Our dataset is the largest wildlife re-ID dataset to date, Table 1 lists a comparison of current wildlife re-ID datasets. The dataset will be divided into training, validation, and testing subsets. The training/validation subsets along with annotations will be released to public, with the annotations for the test subset withheld by the organizers. The dataset paper is released on Arxiv: 1906.05586.

Dataset Copyright: The whole dataset is released under the non-commercial/research purposed CC BY-NC-SA 4.0 Lcense, with MakerCollider and WWF Amur tiger and leopard conservation programme team keeping the copyright of the raw video clips and all derived images.

| Datasets | ATRW | [1,2] | C-Zoo[3] | C-Tai[3] | TELP[4] | α-whale[5] |

|---|---|---|---|---|---|---|

| Target | Tiger | Tiger | Chimpanzees | Chimpanzees | Elephant | Whale |

| Wild | √ | √ | × | × | × | √ |

| Pose annotation | √ | × | × | × | × | × |

| #Images or #Clips | 8,076* | - | 2,109 | 5,078 | 2,078 | 924 |

| #BBoxes | 9,496 | - | 2,109 | 5,078 | 2,078 | 924 |

| #BBoxes with ID | 3,649 | - | 2,109 | 5,078 | 2,078 | 924 |

| #identities | 92 | 298 | 24 | 78 | 276 | 38 |

| #BBoxes/ID | 39.7 | - | 19.9 | 9.7 | 20.5 | 24.3 |

Requirement: We require that participants agree to open-source their solution to support wildlife conservation. Participants allow using pre-trained models on ImageNet, COCO, etc for the challenge. They should clearly state what kind of pre-trained models are used in their submission. Using dataset with tiger category and additional collected tiger data are not allowed. Participants should submit their challenge results as well as full source-code packages for evaluation before deadline.

Tracks

Tiger Detection: From images/videos captured by cameras, this task aims to place tight bounding boxes around tigers. As the detection may run on the edge (smart cameras), both the detection accuracy (in terms of AP) and the computing cost are used to measure the quality of the detector.

Tiger Pose Detection: From images/videos with detected tiger bounding boxes, this task aims to estimate tiger pose (i.e., keypoint landmarks) for tiger image alignment/normalization, so that pose variations are removed or alleviated in the tiger re-identification step. We will use mean average precision (mAP) and object keypoint similarity (OKS) to evaluate submissions.

Tiger Re-ID with Human Alignment (Plain Re-ID): We define a set of queries and a target database of Amur tigers. Both queries and targets in the database are already annotated with bounding boxes and pose information. Tiger re-identification aims to find all the database images containing the same tiger as the query. Both mAP and rank-1 accuracy will be used to evaluate accuracy.

Tiger Re-ID in the Wild: This track will evaluate the accuracy of tiger re-identification in wild with a fully automated pipeline. To simulate the real use case, no annotations are provided. Submissions should automatically detect and identify tigers in all images in the test set. Both mAP and rank-1 accuracy will be used to evaluate the accuracy of different models.

Awards

The workshop will provide awards for each challenge track winner team thanks to our sponsor's generous donation. Detailed award info will be available soon.

Dataset Download

| Track | Split | Images | Annotations |

|---|---|---|---|

| Detection | train | Dectection_train | Anno_Dectection_train |

| test | Detection_test | - | |

| Pose | train | Pose_train,Pose_val | Anno_Pose_trainval |

| test | Pose_test | - | |

| Plain Re-ID | train | ReID_train | Anno_ReID_train |

| test | ReID_test | Anno_ReID_test(Keypoint ground truth + test image list) | |

| Re-ID in the Wild | test | same as detection test set | - |

Format Description

- Detection: Data annotaiton in Pascal VOC format. Submission in COCO detection format. Training with the given training set and testing set will be provided in the test stage.

- Pose: Both data annotaiton and submission are in COCO format. Training with the given training set and testing set will be provided in the test stage.

- Plain ReID: Dataset contains cropped images with manual annotaetd ID and keypoints. Submission should be a json file in the following format:

- ReID in Wild: This task aims to evaluate the performance of Re-ID in a full automatical way.

Paritipants require to build tiger detector, tiger pose estimator, and re-ID module based on the provide training-set, and integrate them as a full pipeline

to re-identification each detected tiger in a set of wild input images.

The test-set is the same as that of the detection task. The re-ID evaluation will use all the detected boxes as "gallery", while the other procedure is smilar to the plain re-ID case. Submission should be a json file with the following schema:

{

"bboxs":[bbox],

"reid_result":[

{"query_id":int, "ans_ids":[int,int,...]}

]

}

where

bbox{

"bbox_id": int, #used in reid_result

"image_id": int,

"pos": [x,y,w,h] #x,y is the top-left coord, all in pixels.

}

where the 'reid_result' is almost the same format as in Plain ReID, with only 'id' replaced by 'bbox_id'. To make the evaluation tackable, partcipants require to make the 'bbox_id' unique in their submission file not only in each test image, but also among all images in the test-set.

[

{"query_id":0,

"ans_ids":[29,38,10,.......]},

{"query_id":3,

"ans_ids":[95,18,20,.......]},

...

]

where the "query_id" is the id of query image, and each followed array "ans_ids" lists re-ID results (image ids) in the confidence descending order.

Similar to most existing Re-ID tasks, the plain Re-ID task requires to build models on training-set, and evaluating on the test-set.

During testing, each image will be taken as query image, while all the remained images in the test-set as "gallery" or "database", the query results should be rank-list of images in "gallery". The evaluation server will separate the test-set into two cases: single-camera and cross camera (see our arxiv report for more details) to measure performance. The evaluation metrics are mAP and top-k (k=1, 5).

Evaluation

Evaluation server is now opened. Thanks EvalAI for the support. Note please choose correct track (or phase) during results submission.

Since Evaluation Server expired, for future research, we release the ground truth and evaluation scripts to public. Those can be found at our github repo.

FAQ

- Q: Do we require registration to attend?

A: No. But, you are required to register to submit results on the evaluation server, which will be released soon. - Q: Detection/keypoint format examples?

A: Please refer to this file for detection and this file for keypoint. Note that the keypoint definition is different in COCO and our datasets, please make corresponding changes. - Q: In plain reID task, 3392 cropped images not match to 1887 annotations in training set?

A: The annotated images include three cases: left/right sides, and frontal tiger face. Our released annotation only contains left/right side pose annotation of tigers since tiger re-id is based on stripe pattern rather than tiger face. For the challenge, please build system based on the annotation file. - Q: Could you provide evaluation tool?

A: We are preparing and testing the evaluation server, which should be ready before we release testing dataset. Please keep an eye on our website for the update. For now, you can use following scripts to test on validate set.

-Detection: standard COCOApi (python ver.).

-Keypoint: modified COCOApi (python ver.). Please change the 'sigmas' in L206 or L523 (depends on the cocoapi version) of cocoeval.py according to our paper (in which squared sigmas are listed).

-Plain ReID: Please refer to evaluate.py of this repo. As the frame excluder are not open to public, the evluation results may tend to be optimistic. - Q: What is difference between open testing leaderboard, and final evaluation leaderboard?

A: We adopt similar strategy as some kaggle challenges. During open testing, we randomly select half samples (fixed) from testset for evaluation, and open this leaderboard to public. The final leaderboard will be based on the evaluation over the whole testset, which will be released to public after the challenge. - Q: How many submissions per day during testing phase?

A: Each participant team will allow at most 4 submissions per day per track. Each participant team requires to include information for all team members (affiliation and email) during evaluation server registration. Multi-account submission from one team is not allowed. Once detected, performance from those accounts will not be taken into consideration for final leaderboard. - Q: How to submit source codes per the requirement, and when?

A: Please create an online repository like github or bitbucket to host your codes and models. The organizer will query the repository link for each team after the testing phase finished. Teams should open their source codes and models before challenge result notification deadline (August 9, PST time). Team without such information will not be considered in the final ranking. - Q: Why evaluation server only evaluate the accuracy for the detection track?

A: Due to evaluation server limitation, the detection track will only evaluate and rank by accuracy (mAP etc). After final submission, we require each team to submit their run-time cost (FLOPs and model-size) information. We will build a metric like performance-per-FLOPs (PPF) to rank submissions in this track. - Q: How to indicate which submission is final?

A: You may add a postfix like "final" into your final submission name to indicate that. If you do not indicate that, we will by default use your best performed submissions in the test-dev evaluation as the final submission.

Important Dates

- Training data release: June 28, 2019

- Testing data release: July 26, 2019

- Result submission deadline: August 2, 2019

- Result notification: August 9, 2019

- Challenge paper submission: August 15, 2019

- Acceptance notification: August 23, 2019

- Camera Ready: August 30, 2019

- Workshop: October 27, 2019

References

- K Ullas Karanth, James D Nichols, N Samba Kumar, et al. Tigers and their prey: predicting carnivore densities from prey abundance. PNAS 101, 14 (2004),4854–4858.

- K Ullas Karanth, James D Nichols, N Samba Kumar, and James E Hines. Assessing tiger population dynamics using photographic capture–recapture sampling. Ecology 87, 11 (2006), 2925–2937.

- Alexander Freytag, Erik Rodner, Marcel Simon, Alexander Loos, et al. Chimpanzee faces in the wild: Log-euclidean cnns for predicting identities and attributes of primates. In German Conference on Pattern Recognition, 2016.

- Matthias Körschens, Björn Barz, and Joachim Denzler. Towards automatic identification of elephants in the wild. arXiv preprint arXiv:1812.04418(2018).

- Andrei Polzounov, Ilmira Terpugova, Deividas Skiparis, and Andrei Mihai. Right whale recognition using convolutional neural networks. arXiv preprint arXiv:1604.05605(2016).

Contact: cvwc2019 AT hotmail.com. Any question related to the workshop such as paper submission, challenge participation, etc, please feel free to send email to the contact mailbox.